There is quite a lot of good documentation available about Docker, including the official documentation, but much of it starts by explaining LXC, cgroups, the Linux kernel and UnionFS in the very first paragraph, which scares off many readers who are not Linux geeks.

In this article I will try to explain Docker in layman’s terms. No prior knowledge of virtual machines, cloud deployments or DevOps practices is assumed.

Docker?

Docker is an open source platform that can be used to package, distribute and run your applications. It provides an easy and efficient way to encapsulate an application (e.g. a Java web application) and any infrastructure required to run it (e.g. Red Hat Linux, Apache web server, Tomcat application server, MySQL database, etc.) as a single “Docker image”, which can then be shared through a central “Docker registry”. The image can then be used to launch a “Docker container”, which makes the contained application available from the host where the container is running.

Docker provides convenient tools to build Docker images in a simple and efficient way. A Docker container, on the other hand, can be thought of as a kind of lightweight virtual machine with a considerably smaller memory and disk space footprint than a full-blown virtual machine.

By enabling fast, convenient and automated deployments, Docker shortens the cycle between writing code, testing it and getting it live in production. In addition, by providing a lightweight container to run the application, Docker enables very efficient utilization of hardware resources.

Docker is open source and can be installed on your notebook or on any server where you want to build and run your Docker images and containers (provided the machine meets the minimum system requirements).

Docker deployment workflow from 1000 feet

Before we look into the individual components of the Docker ecosystem, let us look at one way a Docker workflow can fit into the software development life cycle.

In order to support this workflow, we need a CI tool and a configuration management tool. I picked Bamboo and Ansible, although the workflow would remain the same with other tools or modes of deployment. Below is a possible workflow:

- Code is committed to a Git repository.

- Bamboo triggers a job to build the application from the source code and run the unit/integration tests.

- If the tests are successful, Bamboo builds a Docker image and pushes it to a “Docker registry”. (The Dockerfile, which serves as the template for building the image, is typically committed as part of the codebase.)

- Bamboo runs an Ansible playbook to deploy the image to the QA servers.

- If the QA tests pass as well, Ansible can be used to deploy and start the container in production.
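The workflow above can be sketched as an ordered list of commands that stops at the first failure. This is only an illustration, not what Bamboo does internally. The image name, the Maven build, the run-qa-tests.sh script, the inventory paths and the deploy.yml playbook are all hypothetical placeholders; only the general shape of the docker and ansible-playbook invocations is standard.

```python
from typing import Callable, List, Sequence


def pipeline_steps(image: str) -> List[List[str]]:
    """Return the commands a CI job might run, in order (illustrative only)."""
    return [
        # Build the application and run its tests (assumes a Maven project).
        ["mvn", "verify"],
        # Build the image from the Dockerfile committed with the code.
        ["docker", "build", "-t", image, "."],
        # Publish the image to the registry named in the image reference.
        ["docker", "push", image],
        # Deploy to QA (hypothetical inventory and playbook names).
        ["ansible-playbook", "-i", "inventories/qa", "-e", f"image={image}", "deploy.yml"],
        # Run QA tests (hypothetical script).
        ["./run-qa-tests.sh"],
        # Deploy to production with the same playbook and the same image.
        ["ansible-playbook", "-i", "inventories/production", "-e", f"image={image}", "deploy.yml"],
    ]


def run_pipeline(
    steps: List[List[str]], run: Callable[[Sequence[str]], int]
) -> List[List[str]]:
    """Run steps in order and stop at the first non-zero exit code.

    Returns the list of steps that completed successfully.
    """
    completed = []
    for command in steps:
        if run(command) != 0:
            break
        completed.append(command)
    return completed
```

To execute the commands for real, `run` could be something like `lambda cmd: subprocess.run(cmd).returncode`. Passing the runner in as a parameter keeps the sketch easy to try out without Docker or Ansible installed.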

Docker ecosystem

Docker client and server

Docker is a client-server application. The Docker client talks to a Docker server (also called a daemon), which in turn does all the work. Docker ships with a command-line client binary called docker and a full RESTful API. The Docker client and server can run on the same host or on different hosts.
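To make the client-server split concrete, here is a minimal sketch of a client that asks the daemon for its version over the REST API, using only the Python standard library. It assumes a Linux host where the daemon listens on the Unix socket /var/run/docker.sock (a common default, but your setup may differ) and that the current user is allowed to read that socket. The class and function names are made up for this example.

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # The host name is only used for the Host header; the socket path
        # decides where the request actually goes.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def daemon_version(socket_path: str = "/var/run/docker.sock") -> dict:
    """Send GET /version to the daemon and return the decoded JSON reply."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        return json.loads(response.read())
    finally:
        conn.close()
```

Calling `daemon_version()` on a machine with a running daemon should return a dictionary describing the server. The docker command-line client works along the same lines: it sends requests like this one and the daemon does the actual work.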

Docker images

Images are the building blocks of the Docker world and the build part of Docker’s lifecycle. They are built step by step from a series of instructions, which are typically described in a simple text configuration file called a “Dockerfile”. This gives you a simple, text-based way of declaring the infrastructure and environment dependencies of your application.
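As a rough illustration of what such a file looks like, the sketch below generates a small Dockerfile for a Java web application running on Tomcat. The base image tag (tomcat:9.0), the file name app.war and the port are assumptions chosen for the example; the webapps path and start command follow the conventions of the official Tomcat image as I understand them, so check them against the image you actually use.

```python
def render_dockerfile(base_image: str, war_file: str, port: int) -> str:
    """Return the text of a minimal Dockerfile for a WAR deployed on Tomcat."""
    instructions = [
        # Start from an existing image that already contains Java and Tomcat.
        f"FROM {base_image}",
        # Copy the application into Tomcat's deployment directory.
        f"COPY {war_file} /usr/local/tomcat/webapps/",
        # Document the port the application listens on.
        f"EXPOSE {port}",
        # Command executed when a container is started from the image.
        'CMD ["catalina.sh", "run"]',
    ]
    return "\n".join(instructions) + "\n"


# Hypothetical values for illustration.
print(render_dockerfile("tomcat:9.0", "app.war", 8080))
```

Each instruction is one step of the build. Saving the output as a file named Dockerfile next to app.war and running `docker build -t myapp .` in that directory would turn these steps into an image.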

Docker images are highly portable across hosts and environments. Just as compiled Java code can run on any operating system where a JVM is installed, a Docker image can be run as a Docker container on any host that runs Docker.

Docker registry

Docker registries are the distribution component of Docker. Docker stores the images you build in a registry. There are two types of registries: public and private. Docker Inc. operates the public registry for images, called Docker Hub. You can create an account on Docker Hub to store and share your images, and you also have the option of keeping your images on Docker Hub private.

It is also possible to create your own registry behind your corporate firewall.
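Which registry an image lives in is encoded in the image name itself. The sketch below splits a name into registry, repository and tag using the conventions the docker client follows, as far as I know them: the first path component counts as a registry host only if it contains a dot or a colon or is "localhost", the default registry is Docker Hub (docker.io), and the default tag is "latest". It is a simplification (digest references with "@sha256:..." are not handled), and the example names are hypothetical.

```python
from typing import NamedTuple


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image name into (registry, repository, tag)."""
    first, slash, rest = ref.partition("/")
    # The first component is a registry host only if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", ref

    # Any registry port has been removed, so a remaining ':' marks the tag.
    repository, colon, tag = remainder.rpartition(":")
    if not colon:
        repository, tag = remainder, "latest"

    # Official images on Docker Hub live under the "library" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return ImageRef(registry, repository, tag)


print(parse_image_ref("ubuntu"))
print(parse_image_ref("registry.example.com:5000/myteam/webapp:1.0"))
```

With a private registry behind your firewall, the registry part would simply be your own host name, as in the second example.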

Docker container

If images are the building or packing aspect of Docker, containers are the runtime or execution aspect of Docker. Containers are launched from images and may contain one or more running processes.

Docker borrows the concept of the standard shipping container, used to transport goods globally, as a model for its containers. But instead of goods, Docker containers ship software. Each container contains a software image (its cargo) and, like its physical counterpart, allows a set of operations to be performed on it. For example, it can be created, started, stopped, restarted and destroyed.

As with a shipping container, Docker doesn’t care about the contents of the container while performing these actions; it makes no difference whether the container holds a web server, a database or an application server. Each container is loaded the same way as any other container.

Docker also doesn’t care where you ship your container: you can build on your laptop, upload to a registry, then download to a physical or virtual server, test, deploy to a cluster of a dozen Amazon EC2 hosts, and run.
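The lifecycle operations mentioned above (create, start, stop, restart, destroy) can be pictured as a small state machine. The following is a simplified toy model, not Docker's actual implementation: the state names and the set of allowed transitions are assumptions made for illustration. Note that the operations never look at what the image contains.

```python
from enum import Enum


class State(Enum):
    CREATED = "created"
    RUNNING = "running"
    STOPPED = "stopped"
    DESTROYED = "destroyed"


# (operation, current state) -> next state; anything else is rejected.
TRANSITIONS = {
    ("start", State.CREATED): State.RUNNING,
    ("start", State.STOPPED): State.RUNNING,
    ("stop", State.RUNNING): State.STOPPED,
    ("restart", State.RUNNING): State.RUNNING,
    ("destroy", State.CREATED): State.DESTROYED,
    ("destroy", State.STOPPED): State.DESTROYED,
}


class Container:
    """Toy container: creating it from an image puts it in the CREATED state."""

    def __init__(self, image: str):
        self.image = image
        self.state = State.CREATED

    def apply(self, operation: str) -> State:
        key = (operation, self.state)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {operation} a {self.state.value} container")
        self.state = TRANSITIONS[key]
        return self.state


# The same sequence works whether the cargo is a web server or a database.
for image in ("webserver-image", "database-image"):
    container = Container(image)
    for operation in ("start", "restart", "stop", "destroy"):
        container.apply(operation)
    print(image, container.state.value)
```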

Benefits of Docker

- Efficient hardware utilization: Compared to hypervisor-based virtual machines, Docker containers use less memory, fewer CPU cycles and less disk space. This enables more efficient utilization of hardware resources. You can run more containers than virtual machines on a given host, resulting in higher density.

- Security: Docker isolates many aspects of the underlying host from an application running in a container without root privileges. Also, a container has a smaller attack surface than a complete virtual machine.

- Consistency and portability: Docker provides a way to standardize application environments and enables portability of the application across environments.

- Fast, efficient deployment cycle: Docker reduces the cycle time between code being written, tested, deployed and used.

The following quote is taken from the official documentation:

“Your developers write code locally and share their development stack via Docker with their colleagues. When they are ready, they push their code and the stack they are developing onto a test environment and execute any required tests. From the testing environment, you can then push the Docker images into production and deploy your code.”

- Ease of use: Docker’s low overhead and quick startup times make running multiple containers less tedious.

- Encourages SOA: Docker is a natural fit for microservices and service-oriented architectures (SOA), since each container typically runs a single process or application and you can run multiple containers with little overhead on the same system.

- Segregation of duties: In the Docker ecosystem, developers care about making the application work inside the container, and ops cares about managing those containers.

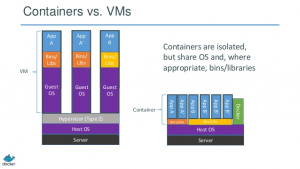

Containers vs. virtual machines

- Virtual machines have a full OS with its own memory management, device drivers, daemons, etc. Containers share the host’s OS and are therefore lighter weight.

- Because containers are lightweight, starting a container takes approximately a second, whereas booting a VM can take several minutes.

- Containers can generally run only the same or a similar operating system as the host. For example, you can run Red Hat in a container on an Ubuntu host, but you can’t run Windows on an Ubuntu host. (In most practical cases, running a different OS type is not a real requirement.)

- With VMs, in theory, vulnerabilities in a particular OS version can’t be leveraged to compromise other VMs running on the same physical host. Since containers share the same kernel, admins and software vendors need to take special care to avoid security issues arising from adjacent containers.

The counterargument is that lightweight containers lack the larger attack surface of the full OS needed by a VM, as well as the potential exposures of the hypervisor itself.
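One simple way to see the shared kernel for yourself is to print the kernel release on the host and again inside a container. On a Linux host the two values should match, because the container has no kernel of its own, whereas a VM would report the kernel of its guest OS. On Docker Desktop for macOS or Windows, containers run inside a Linux virtual machine, so the value reflects that VM's kernel instead. The function name below is just for this example.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on."""
    return platform.release()


print(kernel_release())
```

To compare, run the script on the host and then inside a container, for example with `docker run --rm python:3 python -c "import platform; print(platform.release())"` (this assumes the python:3 image can be pulled from Docker Hub).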

References

- Docker user guide

- Security risks and vulnerabilities of Docker

- Containers Vs. VMs

- Docker: Using Linux Containers to Support Portable Application Deployment

- Contain yourself: The layman’s guide to Docker

- Deploying Java applications with Docker